Stop Using AI to Write Your Content (Here's Why)

Before I get into why I’m against using AI for content creation, let me be clear: I’m not against AI itself. I have no problem using AI tools, automation, or language models during the content creation process. They’re excellent for research, competitor analysis, and helping writers work faster. AI can be a great assistant—like having a research agent that summarizes what competitors have said and analyzes information quickly.

What I’m against is relying on AI to actually create your content.

The Google Reality Check

Let’s look back at the Helpful Content Update, which Google introduced in 2022 and strengthened in 2023. Search engines exist to provide the best results to users. Google, as the biggest search engine, spends heavily on crawling, analyzing, and improving its AI to assess content quality. When AI-generated content started flooding the web, it raised Google’s costs to crawl and analyze all of it.

That’s why the Helpful Content Update became one of the most important updates in recent years. The core idea is simple: content should create value and be helpful. But why is helpfulness so important now? Because thousands of articles already cover most general topics. The real question is whether a new piece is needed at all, and whether it brings a point of view or experience that didn’t exist before.

Think about it this way: if I write about the calories in eggs, is it really needed? Will it change anything or bring something new? Usually not. Value comes when what we publish brings a new perspective or experience. Google wants to know whether the writer has the relevant experience, because a real expert will bring a unique viewpoint. That’s when new value is created, and that’s when content has a better chance of being seen.

Why AI Content Fails

Here’s the fundamental problem: large language models are trained on existing content and can’t create something truly new. They rearrange what already exists based on prompts. Creation is what matters now, and AI can’t go beyond its training data to do original research or hands-on trial and error. That gap matters most in YMYL (Your Money or Your Life) topics and other specialized fields.

As we move through 2025, E-E-A-T (Experience, Expertise, Authoritativeness, Trustworthiness) and the other concepts in Google’s Search Quality Rater Guidelines are more important than ever.

The Hype vs. Reality

There’s a lot of hype among company managers and stakeholders who fear they’re falling behind if they don’t use AI. They get excited about publishing huge volumes of content and believe they’re being smart. Some inexperienced people even rebrand themselves as “AI Content Engineers,” expecting impressive results. In reality, they’re just producing the same content with different wording.

My question to them is: how much new value are you really creating? Can you be honest with your audience and tell them this was AI-generated? Can you claim a real expert wrote it? These are essentially the questions Google asks in its guidance on creating helpful, people-first content.

Real-World Failures

We’re seeing the consequences play out in real time. Let me share some concrete examples:

1- The “SEO Heist”

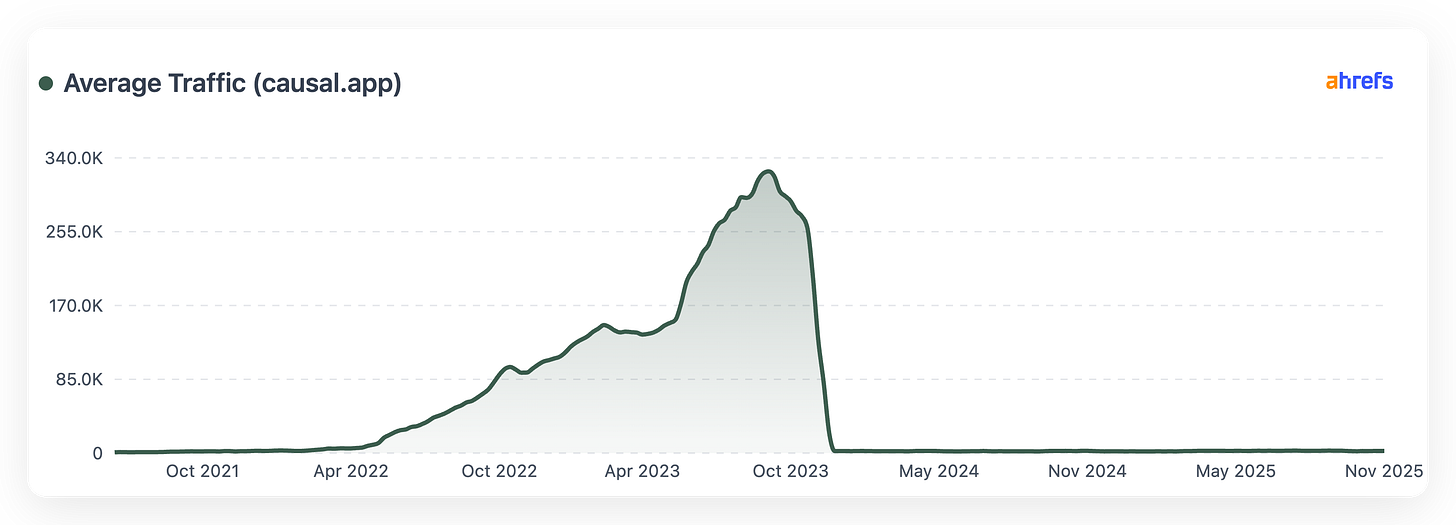

That Backfired In late 2023, a startup called Casual tried something that seemed clever at first. They looked at their competitor Exceljet—a site with thousands of well-structured articles about Excel formulas—and decided to copy their approach using AI.

Here’s what they did:

Exported Exceljet’s complete sitemap

Converted the URLs into AI prompts

Generated about 1,800 articles in hours

Published everything immediately

Initially, it seemed to work. Traffic surged from near-zero to 490,000 monthly visits. But then the operator bragged about it on Twitter, calling it “stealing” traffic. That got Google’s attention.

The site was hit with a “Pure Spam” manual action. Traffic plummeted from 610,000 visits to under 200,000, then eventually to almost nothing. The deep pages they’d generated were completely deindexed—dropping to just a single page in Google’s index.

2- The 22,000-Page Disaster

Another example is TailRide (formerly GetInvoice). They tried using programmatic SEO with AI to shortcut their growth. They generated over 22,000 pages targeting random long-tail keywords like “forever 21 return policy” and “dual digital option”—topics completely unrelated to their invoicing software.

At first, they saw millions of impressions and hundreds of clicks. But on February 28, 2024, their traffic dropped to zero overnight. The penalty was so severe they had to abandon their entire brand and domain, rebranding from GetInvoice to TailRide.

3- The “325 Posts Per Day” Case

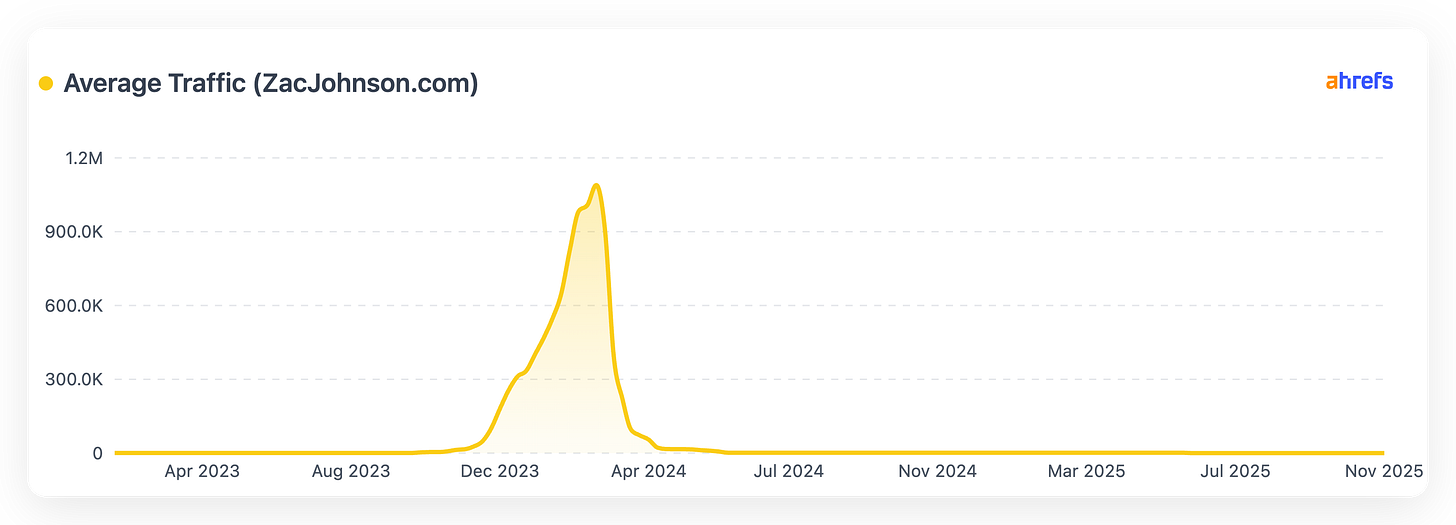

One of the most extreme examples is ZacJohnson.com. Between September 2023 and March 2024, this site published over 60,000 articles—averaging about 325 posts per day. Let that sink in: 325 articles every single day.

The site shifted from legitimate marketing advice to covering everything: celebrity gossip, “best-of” lists, trending news, basically anything with search volume. They used AI to mass-produce content on topics completely outside their expertise, hoping to capture broad traffic for ad revenue.

The result? Traffic collapsed from approximately 8.2 million monthly visits to zero. The entire site was deindexed.

Other Examples

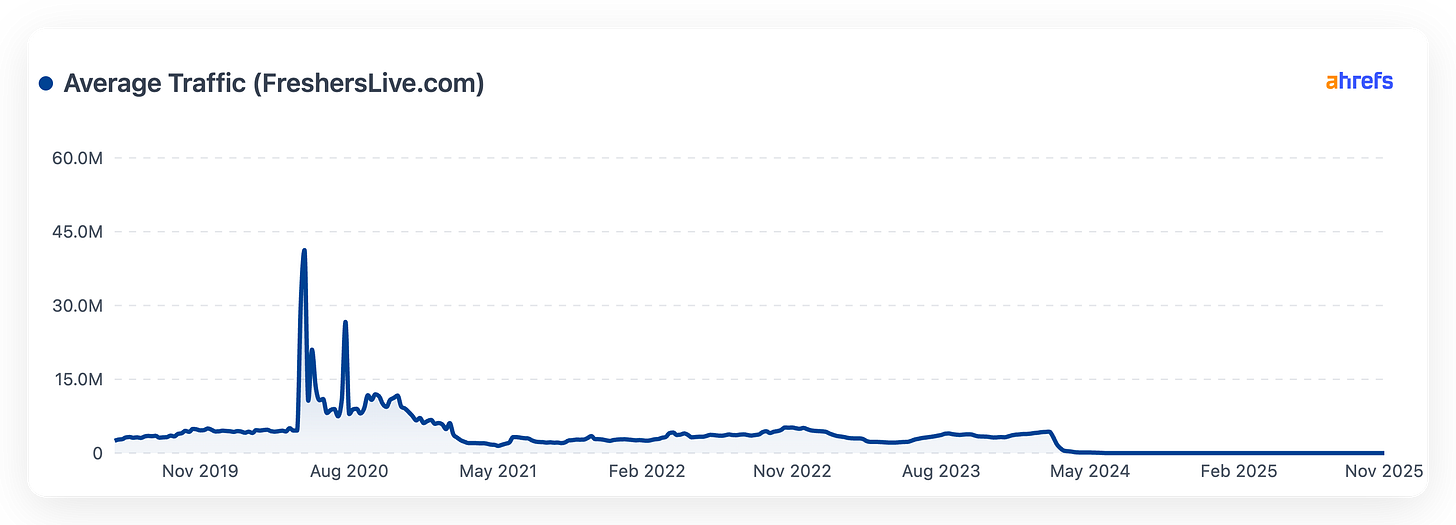

FreshersLive.com:

Lost approximately 10 million monthly visits when deindexed

EquityAtlas.org:

Dropped from 4 million monthly visitors to near zero

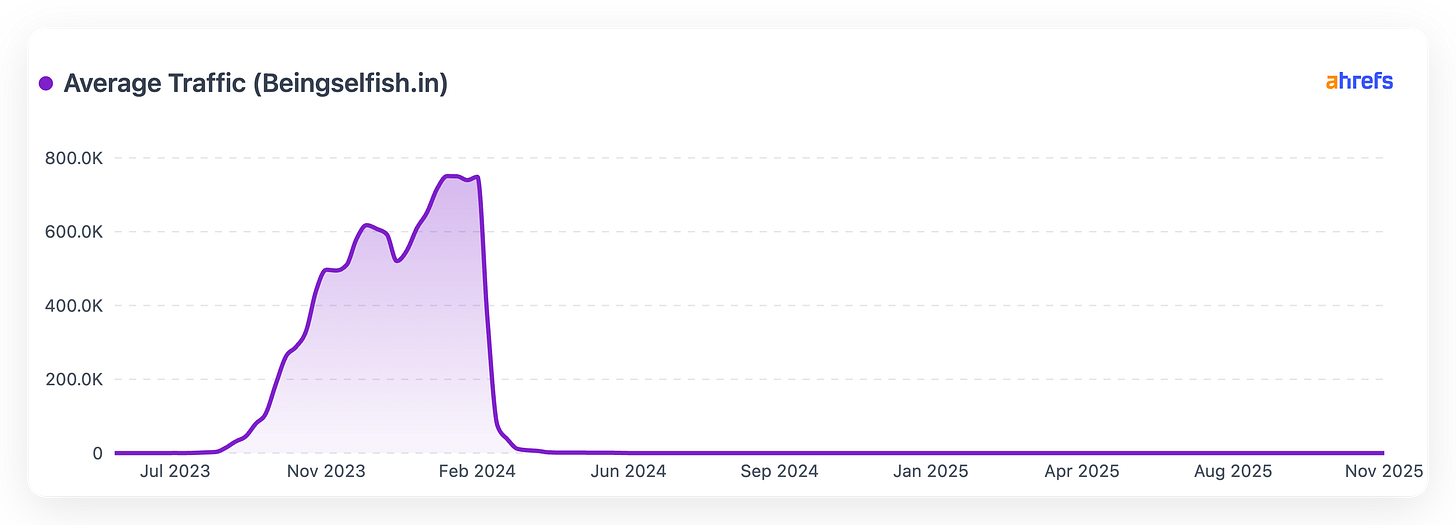

Beingselfish.in:

Fell from 1 million+ visits to zero

Google’s New Definition of Spam

In March 2024, Google significantly tightened its spam policies. It introduced the concept of “Scaled Content Abuse,” defined as when “many pages are generated for the primary purpose of manipulating search rankings and not helping users,” no matter how the content is created: by AI, by humans, or by a combination of both.

This definition focuses on intent and value rather than method. Even if a human edits every AI-generated article, it’s still spam when the intent is to flood Google with low-value content to capture traffic.

The Trust Problem

Here’s what many people miss: Google’s “Helpful Content” system creates a site-wide signal. If you have 100 excellent human-written articles and 5,000 AI-generated filler pages, Google can treat your entire domain as mostly unhelpful. That negative signal suppresses the rankings of all your pages, including the good ones.

This is why removing low-quality content (often called “pruning”) is frequently required for recovery: the bad content actively drags down the good content.

My Bottom Line

That’s the main reason I’m against AI content creation. We’re in a game where if you don’t create real value, you shouldn’t be seen. AI should be a helper in your process for research, analysis, and organization. But the actual creation needs to come from real experience and expertise.

When you publish AI-generated content at scale, you’re not just risking a ranking drop. You’re risking:

Complete deindexing from Google

Manual penalties that can permanently damage your domain

Loss of trust that spreads across your entire website

Having to abandon your brand and start over

The sites that are succeeding now are the ones that can prove a human expert actually created the content, brought real experience to the topic, and added something new to the conversation.

If you can’t honestly tell your audience that a real expert with genuine experience wrote your content, then you shouldn’t publish it. It’s that simple.
